
Source: LinkedIn

What is Data Science?

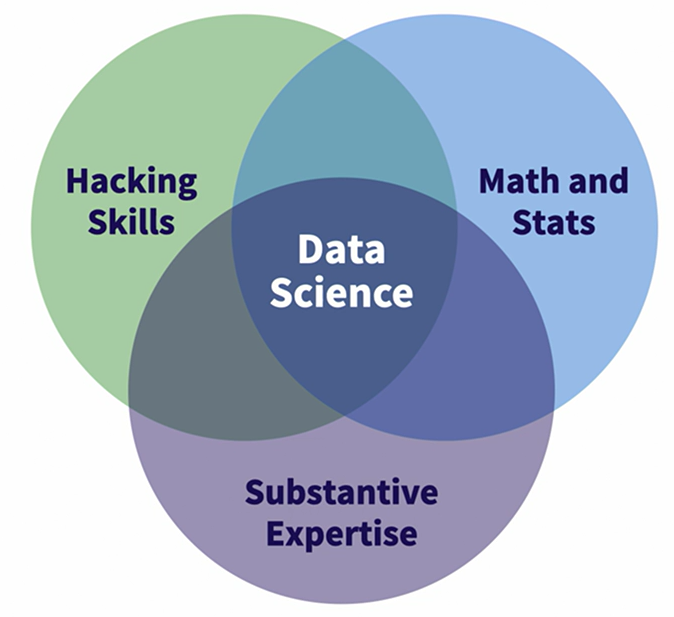

The data science Venn diagram

- Introduction to Data Science: Drew Conway proposed in 2010 that combining hacking skills, math and statistics, and substantive expertise gives rise to data science, a field that is transforming technology and business.

- Importance of Hacking Skills:

  - Hacking skills supply the creativity needed to work with novel data sources such as social media, images, video, and streaming data.

  - Essential programming languages include Python, R, C, C++, Java, and SQL (Structured Query Language).

  - TensorFlow, an open-source library for deep learning, has had a major impact on data science.

- Mathematical Elements of Data Science:

  - Relevant mathematical concepts include probability, linear algebra, calculus, and regression.

  - Mathematics helps you choose procedures that fit your data and your question, make informed choices, and diagnose problems when they arise.
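
To make regression concrete, the sketch below fits a straight line y = a + bx by ordinary least squares using only the Python standard library. It is a minimal illustration: the function name `fit_line` and the toy data are hypothetical, and real projects would usually rely on an established library.

```python
from statistics import mean


def fit_line(xs, ys):
    """Return (intercept, slope) of the ordinary least-squares line through the points."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope = sum of cross-deviations / sum of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The least-squares line always passes through the point of means.
    intercept = y_bar - slope * x_bar
    return intercept, slope


xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 8.1, 9.8]  # hypothetical toy measurements
intercept, slope = fit_line(xs, ys)
print(f"y = {intercept:.2f} + {slope:.2f}x")
```

On Python 3.10 or later, `statistics.linear_regression(xs, ys)` returns the same slope and intercept.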

- Substantive Expertise:

  - Each domain or topic area in data science has its own goals, methods, and constraints.

  - Understanding what adds value in a specific domain, and turning insights into action there, is crucial.

- Integration of Components:

  - The combination of hacking or programming, math and statistics, and substantive expertise forms the foundation of data science.

  - Together, these components create a synergistic effect, making data science more than just the sum of its parts.

The data science pathway

- Introduction to the Data Science Pathway:

  - Data science projects require planning and coordination. The process is like walking down a pathway, where each step brings you closer to your goal.

- Planning the Project:

  - Define goals so you know what outcomes you want.

  - Organize resources, including computers, software, data access, and personnel.

  - Coordinate team efforts.

  - Schedule the project to manage time effectively.

- Data Wrangling:

  - Gather raw data from sources such as open data and public APIs.

  - Clean the data so that it fits the paradigm of the program or application you will use.

  - Explore the data through visualizations and numerical summaries.

  - Refine the data based on what exploration reveals, for example by recategorizing cases or combining variables.
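
The four wrangling steps can be sketched with the Python standard library. This is a minimal illustration under stated assumptions: the inline CSV text stands in for data gathered from an open-data source or API, and the column names, the `min_count` threshold, and the function names are all hypothetical.

```python
import csv
import io
from collections import Counter
from statistics import mean, median

# Hypothetical raw data standing in for a download from an open-data portal or API.
RAW = """city,region,population,area_km2
 Springfield ,North,120000,50
Shelbyville,north,80000,40
Ogdenville,South,,30
Capital City,South,900000,200
North Haverbrook,West,30000,25
"""


def gather(text):
    """Gather: read raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows):
    """Clean: trim whitespace, normalize case, drop incomplete rows, convert types."""
    cleaned = []
    for row in rows:
        row = {k: (v or "").strip() for k, v in row.items()}
        if not all(row.values()):
            continue  # skip rows with any missing field
        cleaned.append({
            "city": row["city"],
            "region": row["region"].title(),  # "north" -> "North"
            "population": int(row["population"]),
            "area_km2": float(row["area_km2"]),
        })
    return cleaned


def explore(rows):
    """Explore: simple numerical summaries of the cleaned data."""
    pops = [r["population"] for r in rows]
    return {
        "n": len(rows),
        "mean_population": mean(pops),
        "median_population": median(pops),
        "cities_per_region": Counter(r["region"] for r in rows),
    }


def refine(rows, min_count=2):
    """Refine: combine variables (density) and recategorize rare regions as 'Other'."""
    counts = Counter(r["region"] for r in rows)
    return [
        {**r,
         "density": r["population"] / r["area_km2"],
         "region": r["region"] if counts[r["region"]] >= min_count else "Other"}
        for r in rows
    ]


cleaned = clean(gather(RAW))
summary = explore(cleaned)
print(summary)
refined = refine(cleaned)
for r in refined:
    print(r["city"], r["region"], round(r["density"], 1))
```

Each step is a separate function, so a refinement suggested by exploration can be added without touching the gathering or cleaning code.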

- Modeling:

  - Create the model, for example a statistical model such as linear regression, a decision tree, or a deep learning neural network.

  - Validate the model to check that it generalizes to new datasets.

  - Evaluate the model’s fit, return on investment, and usability.

  - Refine the model based on the evaluation, for example by adjusting its parameters.
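
The create, validate, evaluate, and refine loop can be sketched on toy data. This minimal Python example swaps in k-nearest-neighbors regression, which is not one of the model types named above, because it has a single parameter `k` that is easy to tune. The data generator, the 25% hold-out split, and the candidate `k` values are assumptions made for illustration. Fit is measured as mean squared error on held-out validation points.

```python
import random
from statistics import mean


def make_data(n=60, seed=0):
    """Hypothetical toy data: y = 2x plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2 * x + rng.gauss(0, 1)) for x in xs]


def split(data, valid_fraction=0.25, seed=0):
    """Shuffle a copy of the data and hold out a fraction for validation."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - valid_fraction))
    return shuffled[:cut], shuffled[cut:]


def knn_predict(train, x, k):
    """Create/apply the model: predict y at x as the mean y of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return mean(y for _, y in nearest)


def mse(train, points, k):
    """Evaluate fit: mean squared prediction error on the given points."""
    return mean((knn_predict(train, x, k) - y) ** 2 for x, y in points)


data = make_data()
train, valid = split(data)
# Validate and refine: score several values of k on held-out data, keep the best.
errors = {k: mse(train, valid, k) for k in (1, 3, 5, 10, 20)}
best_k = min(errors, key=errors.get)
print("validation MSE by k:", {k: round(e, 3) for k, e in errors.items()})
print("chosen k:", best_k)
```

Because `k` is chosen using the validation set, a fair estimate of performance on new data would need a further test set that is never used during tuning.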

- Applying the Model:

  - Present the model’s findings to decision-makers, clients, or stakeholders.

  - Deploy the model online or in dashboards for practical use.

  - Revisit and revise the model as needed based on performance and new data.

- Archive Assets:

  - Document the data source and processing steps.

  - Comment the analysis code so that it stays understandable in the future.

  - Clean up and archive assets properly for future reference.
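
One lightweight way to document a data source and its processing steps is a small manifest kept next to the data. The sketch below is an illustration, not a standard format: the field names, the placeholder URL, and the sample bytes are hypothetical. It records a SHA-256 checksum so a future reader can confirm that the archived file is unchanged.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_manifest(data_bytes, source, steps):
    """Describe where a dataset came from and how it was processed."""
    return {
        "source": source,
        # When this record was created, in UTC.
        "recorded_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        # Checksum lets a future reader verify the archived file is unchanged.
        "sha256": hashlib.sha256(data_bytes).hexdigest(),
        "processing_steps": list(steps),
    }


raw = b"city,population\nSpringfield,120000\n"  # hypothetical file contents
manifest = build_manifest(
    raw,
    source="https://example.org/open-data/cities.csv",  # placeholder URL
    steps=["trimmed whitespace", "dropped rows with missing population"],
)
# In a real project this would be saved (for example as manifest.json) beside the data.
print(json.dumps(manifest, indent=2))
```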

- Project Success:

  - Following each step on the pathway contributes to project success and makes it easier to manage the project and calculate its return on investment.

  - The ultimate goal is to gain valuable insights into the business model.
